Since the IEEE approved the 802.3bs standard in 2017, 400GbE has become the talk of the town. The main reason is that it substantially outperforms existing solutions: compared with 100GbE, it quadruples the data rate per port. Cloud service providers and network infrastructure vendors are making vigorous efforts to speed up deployment. However, a number of challenges can hamper effective implementation and, in turn, adoption.

In this article, we take a detailed look at the opportunities and challenges involved in implementing 400G Ethernet in enterprise networks. This gives a clear picture of the impact this technology will have on large-scale organizations.

Opportunities for 400G Ethernet Enterprise Networks

- Better management of video streaming traffic

- Meeting IoT traffic demands

- Improved data transmission density

How can 400G Ethernet assist enterprise networks in handling growing traffic demands?

Rise of 5G Connectivity

Rising traffic and bandwidth demands are pushing communications service providers (CSPs) to adopt 5G rapidly, for both business and consumer customers. A successful rollout requires a massive increase in bandwidth to handle 5G backhaul. In addition, 400G can give CSPs greater density in small-cell deployments. 5G deployment also requires cloud data centers to be brought closer to users and devices. This streamlines edge computing (handling time-sensitive data close to where it is generated), which is another game-changer in this area.

Data Centers Handling Video Streaming Services Traffic

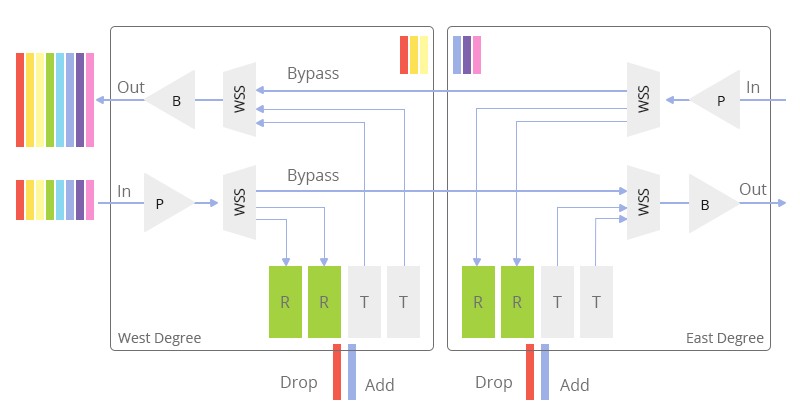

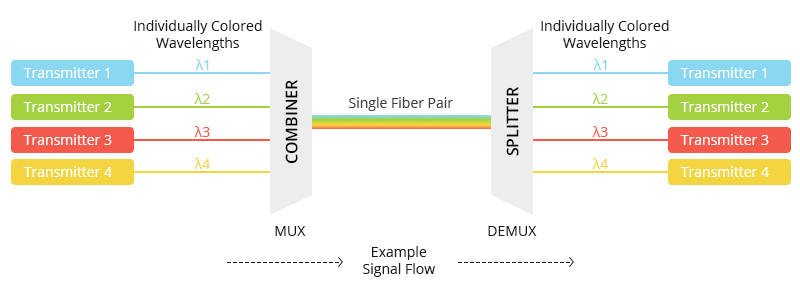

The introduction of 400GbE is a major opportunity for the data centers that act as content delivery networks (CDNs) behind video streaming services, because the demand for bandwidth is outpacing what current technology can deliver. As the number of users has grown, higher-quality streams such as HD and 4K have put additional pressure on bandwidth. A successful implementation of 400GbE would therefore come as a relief for these data centers. Apart from faster data transfer, it would also reduce issues such as jitter. Furthermore, carrying large amounts of data over a single wavelength would lower maintenance costs.
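To put the bandwidth pressure into rough numbers, the sketch below estimates how many concurrent streams a single link can carry. The per-stream bitrate (25 Mb/s for a 4K stream) and the 80% utilization ceiling are illustrative assumptions, not figures from any CDN, and `max_streams` is a hypothetical helper.

```python
def max_streams(link_gbps: int, stream_mbps: int, utilization_pct: int = 80) -> int:
    """Rough count of concurrent video streams a link can carry.

    Assumes every stream uses a constant bitrate and that the link is only
    loaded up to `utilization_pct` percent, leaving headroom for bursts.
    """
    usable_mbps = link_gbps * 1000 * utilization_pct // 100  # Gb/s -> Mb/s, minus headroom
    return usable_mbps // stream_mbps

# Hypothetical 25 Mb/s 4K stream on a 100GbE port versus a 400GbE port.
for speed in (100, 400):
    print(f"{speed}GbE: ~{max_streams(speed, 25)} concurrent 4K streams")
```

Under these assumptions, the 400GbE port carries four times as many streams (about 12,800 versus 3,200). Real capacity depends on the codec, the bitrate ladder, and the overall traffic mix.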

High-Performance Computing (HPC)

High-performance computing is used in virtually every industry, from healthcare and retail to oil & gas and weather forecasting. Each of these fields requires real-time data analysis, which will be a driver of 400G growth. Together, HPC and 400G will extract every bit of performance from the infrastructure, improving both financial and operational efficiency.

Addressing the Internet of Things (IoT) Traffic Demands

Another opportunity this technology offers is helping data centers manage IoT demands. Each IoT device generates relatively little data; the strain comes from the sheer number of connections in aggregate. Working together, these devices open new pathways over internet and Ethernet networks, which leads to a steep increase in traffic. A fourfold increase in data transfer speed will make it considerably easier for the data centers involved to keep pace.

Greater Density for Hyperscale Data Centers

To meet growing data needs, the number of data centers is also rising considerably. Relevant statistics show that 111 new hyperscale data centers were set up during the last two years, 52 of them during the peak of COVID, when logistical problems were at an unprecedented high. In view of this, every new data center coming online is looking to set up 400GbE. The greater fiber, rack, and switch density that 400GbE provides would help these facilities accommodate large, complex computing and networking requirements while minimizing their ESG footprint.

Easier Said Than Done: What Are the Challenges of 400G Ethernet Technology?

Below are some of the challenges enterprise data centers are facing in 400G implementation.

Cost and Power Consumption

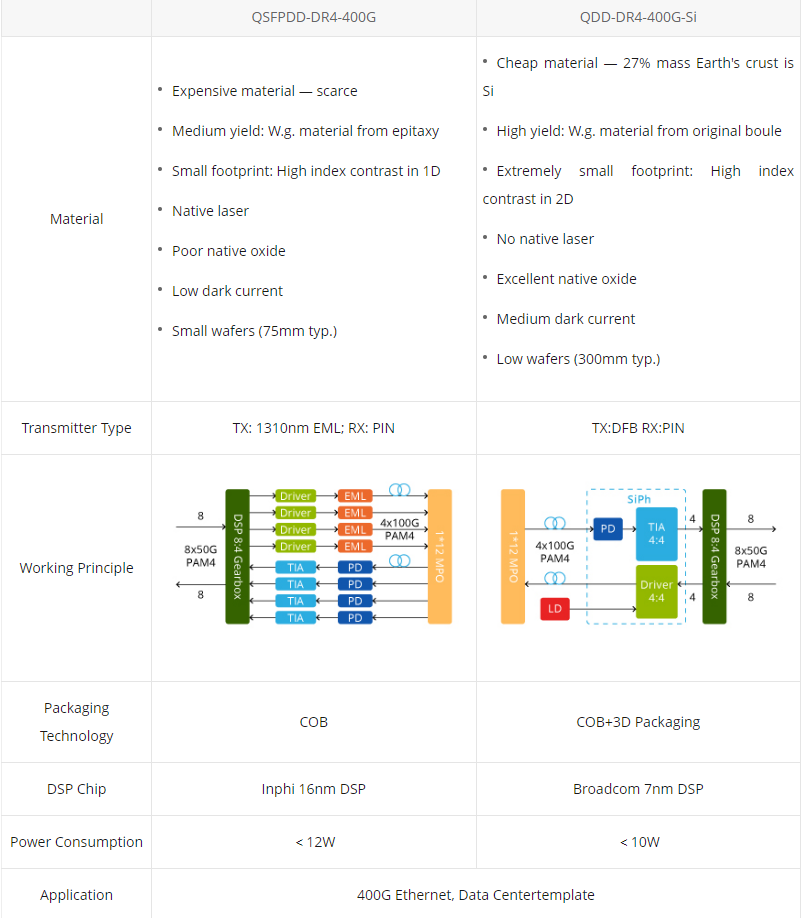

Today's 400G transceivers and digital signal processors (DSPs) are power-intensive. In addition, some transceivers do not yet support the latest multi-source agreement (MSA); instead, individual vendors develop them using their own proprietary technology.

Overall, the aim is to reduce both cost per gigabit ($/Gb) and power per gigabit (W/Gb).
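Both metrics are simple ratios of module price and power draw to port speed. The sketch below compares a 100G module with a 400G module. The prices and wattages are placeholder values chosen for easy arithmetic, not real market data, and `per_gigabit` is a hypothetical helper.

```python
from typing import Tuple


def per_gigabit(speed_gbps: float, price_usd: float, power_w: float) -> Tuple[float, float]:
    """Return (USD per Gb/s, watts per Gb/s) for a single optical module."""
    if speed_gbps <= 0:
        raise ValueError("speed must be positive")
    return price_usd / speed_gbps, power_w / speed_gbps


# Placeholder figures for illustration only: (Gb/s, USD, W).
modules = {
    "100G": (100, 100.0, 4.0),
    "400G": (400, 300.0, 12.0),
}
for name, (speed, price, power) in modules.items():
    usd_per_gb, w_per_gb = per_gigabit(speed, price, power)
    print(f"{name}: ${usd_per_gb:.2f}/Gb, {w_per_gb:.3f} W/Gb")
```

With these made-up numbers, the 400G module wins on both metrics only because it costs and draws less than four 100G modules. If a 400G module consumed more than four times the power of a 100G module, its W/Gb advantage would disappear, which is why power-hungry 400G optics and DSPs are a concern.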

The Need for Real-World Networking Plugfests

Although the IEEE has approved the standard, a number of modifications still need to be made in areas such as specifications, manufacturing, and design. Tests conducted so far have shown promising results, but interoperability still needs to be tested in real-world networking environments. Such plugfests would show how the technology actually performs in enterprise networks and would bring to light any issues at any layer of the network.

Transceiver Reliability

Transceiver reliability is another major challenge. Manufacturers are currently finding it hard to stay within the device power budget, mainly because the QSFP transceiver form factor is a relatively old design, originally created for 40GbE. Failing to meet the power budget leads to problems such as overheating, optical distortion, and packet loss.

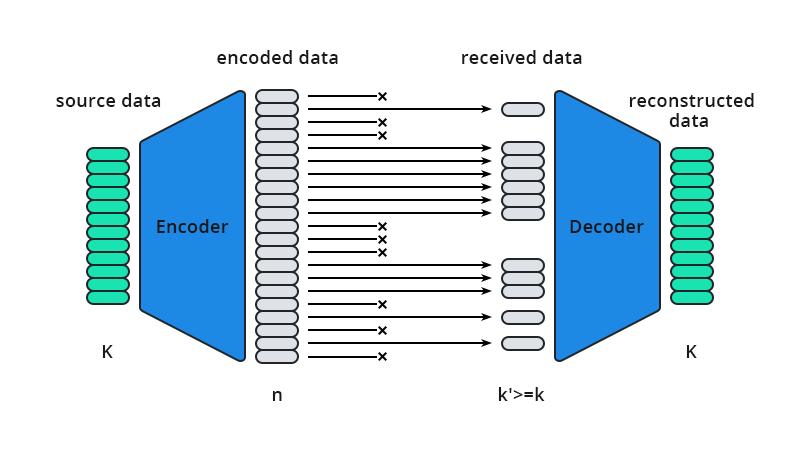

The Transition from NRZ to PAM-4

Furthermore, the shift from binary non-return-to-zero (NRZ) signaling to four-level pulse amplitude modulation (PAM-4) that came with 400GbE poses a challenge for encoding and decoding. NRZ was a familiar optical coding scheme, whereas PAM-4 carries two bits per symbol on four signal levels, which narrows the gap between adjacent levels and makes the signal more sensitive to noise. As a result, PAM-4 requires more extensive hardware and a higher level of sophistication, and mastering it takes time, even for a single manufacturer.
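The difference between the two schemes can be sketched in a few lines: NRZ sends one bit per symbol on two levels, while PAM-4 sends two bits per symbol on four levels, halving the number of symbols needed for the same data. The sketch assumes the Gray-coded mapping that IEEE 802.3 uses for its PAM-4 interfaces, in which adjacent levels differ by a single bit, so the most likely error (mistaking a level for its neighbor) corrupts only one bit. The function names are illustrative.

```python
from typing import Dict, List, Tuple

# Assumed Gray-coded PAM-4 mapping: bit pair -> amplitude level 0..3.
# Neighboring levels (0-1, 1-2, 2-3) differ in exactly one bit.
GRAY_PAM4: Dict[Tuple[int, int], int] = {(0, 0): 0, (0, 1): 1, (1, 1): 2, (1, 0): 3}
LEVEL_TO_BITS: Dict[int, Tuple[int, int]] = {lvl: pair for pair, lvl in GRAY_PAM4.items()}


def nrz_encode(bits: List[int]) -> List[int]:
    """NRZ: one bit per symbol, two levels (0 or 1)."""
    return list(bits)


def pam4_encode(bits: List[int]) -> List[int]:
    """PAM-4: two bits per symbol, four Gray-coded levels."""
    if len(bits) % 2:
        raise ValueError("PAM-4 needs an even number of bits")
    return [GRAY_PAM4[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


def pam4_decode(symbols: List[int]) -> List[int]:
    """Map each PAM-4 level back to its bit pair."""
    out: List[int] = []
    for level in symbols:
        out.extend(LEVEL_TO_BITS[level])
    return out


bits = [1, 0, 0, 1, 1, 1, 0, 0]
print("NRZ symbols:  ", nrz_encode(bits))   # 8 symbols
print("PAM-4 symbols:", pam4_encode(bits))  # 4 symbols
```

Halving the symbol count is what lets PAM-4 double the bit rate at the same baud rate, but the four levels must fit in the same signal swing. That is the trade-off behind the extra DSP and error-correction hardware mentioned above.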

Greater Risk of Link Flaps

Enterprise use of 400GbE also increases the risk of link flaps. A link flap occurs when an optical connection rapidly goes down and comes back up. Whenever this happens, auto-negotiation and link training must be performed before data is allowed to flow again. With 400GbE, link flaps can occur for several additional reasons, such as switch problems, transceiver design flaws, or heat.
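One common way to monitor for flaps is to count how often a link goes down within a sliding time window. The sketch below is a minimal, hypothetical detector of that kind; the class name and the thresholds (more than three down events within ten seconds) are illustrative assumptions, not any vendor's defaults or a standardized behavior.

```python
from collections import deque
from typing import Deque


class FlapDetector:
    """Hypothetical flap detector: flags a link as flapping when it goes
    down more than `max_flaps` times within `window_s` seconds.
    Thresholds are illustrative assumptions, not vendor defaults."""

    def __init__(self, max_flaps: int = 3, window_s: float = 10.0) -> None:
        self.max_flaps = max_flaps
        self.window_s = window_s
        self._downs: Deque[float] = deque()  # timestamps of up -> down transitions
        self._state = "up"

    def observe(self, t: float, state: str) -> bool:
        """Record the link state at time t (seconds); return True if flapping."""
        if state == "down" and self._state == "up":
            self._downs.append(t)
        self._state = state
        # Discard down events that have slid out of the time window.
        while self._downs and t - self._downs[0] > self.window_s:
            self._downs.popleft()
        return len(self._downs) > self.max_flaps


detector = FlapDetector()
events = [(0, "down"), (1, "up"), (2, "down"), (3, "up"), (4, "down"), (5, "up"), (6, "down")]
for t, state in events:
    print(t, state, "flapping" if detector.observe(t, state) else "ok")
```

Only the fourth down event within the window trips the detector. Once the link stays stable long enough for old events to age out, it is no longer reported as flapping.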

Inference

Full deployment of 400GbE in enterprise networks will undoubtedly make management easier for cloud service providers and networking vendors. However, the road is still bumpy. With modernization and rapid technological advancement, scaling will become much easier for data centers, yet a successful implementation is still a long way off. While higher data transfer rates will ease traffic management, risks such as fiber misalignment and packet loss still need to be tackled.

Article Source: How 400G Ethernet Influences Enterprise Networks?
